Having just finished a customer POC using Power BI, I wanted to share my thoughts on the toolset. I feel confident that Microsoft are moving in the right direction with Power BI and that its objectives, delivering self-service and mobile BI, are exactly what a lot of my customers want. Doing that wrapped in the familiar Excel, and bundled with the new licensing options in Office 365, makes it an exciting proposition.

Where to Begin?

My first issue with Power BI is that the overall messaging and marketing is confusing. I am fairly competent, but setting up my Office 365 trial with Power BI took some getting my head around. However, I am also a typical IT man in that I try never to read the getting started guide. After an hour of faffing around I went to the Power BI getting started guide and it helped, a lot!

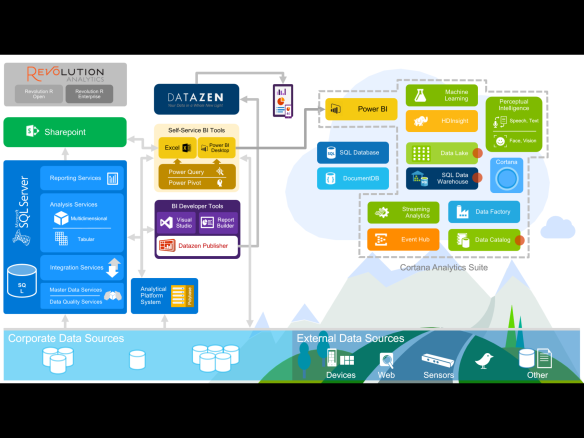

To understand it better I put together the following diagram, which hopefully helps. Please note this is not an overview of the whole of Power BI, just the elements we covered in this POC:

Basically, Power BI is an app that runs inside SharePoint Online. You get access to SharePoint Online if you sign up for an Office 365 subscription (which can be paid monthly or annually). With Office 365 you can use the Office apps online or download them to your desktop. To be able to use the Power BI Excel add-ins (Power View, Power Pivot, Power Query, Power Map) you will need Office 365 Professional Plus. Unfortunately, even users who will simply consume reports/dashboards through your Power BI site need the Power BI add-on to your subscription (tenant). Again, this is something that should be looked at by Microsoft.

Power BI components, utilised during this POC:

Power Pivot – probably needs no introduction but is a data modelling add-in for Excel that allows users to bring together data from multiple sources, relate it, extend it and add calculations using DAX.

Power View – a dashboarding and visualisation tool (perhaps the same thing) that can source data from Excel, Power Pivot or SSAS Tabular DBs (in SharePoint on-premise, integrated mode it runs inside SSRS and can work with SSAS Multidimensional DBs). Power View has some great charting functions and allows relating dashboard items, advanced filters, Bing maps, play axis and slicers across the whole dashboard.

Power Query – this is a self-service ETL tool (to some degree). It allows you to connect out to the internet and grab tables and lists of data (except Twitter at this point, contrary to all the pre-sales demos showing you Twitter feeds; to get this currently you will need a third-party connector). Once you have the data you can add it to Power Pivot models and then analyse it using Excel and Power View, for example.

Power Map – this is my least used tool. It looks great in demos, but I have yet to see a use over and above Bing Maps in Power View. It ultimately works in a similar way to Bing Maps in Power View in that you can plot locations and look at measures on a map. The key benefit of Power Map is that you can then record a “tour”, where you record your analysis around the map and then save it out to a video, for example.

So to get started with Power BI you go get yourself a trial of Office 365, add the Power BI functionality to it, follow the getting started guide and then start building out some Power Pivot models in Excel that you can use as a source for reports and dashboards in Power View.

The final piece of the Power BI puzzle, and one that I found really great, is the Windows 8.1 Power BI app. This will also be available for iOS and Android later this year. It allows the user of the app to browse to the SharePoint Online site and feature reports from that site in the app.

What went right, what went wrong?

So back to the proof of concept. It took us about half a day to construct a very basic SharePoint site that had the Power BI app enabled. We downloaded and installed Office 365 Pro Plus on our desktop, and then it took a lot more time to try and come up with useful content. Our major issue was that we were trying to use this to surface our SSAS 2012 Multidimensional cube. We have a large(ish) cube (around 40 GB) with a lot of data. However, the biggest flaw with Power BI, today, is that you cannot connect to on-premise SSAS databases directly. You have to either create subsets of data for each and every report you need in an embedded Power Pivot model, or try to create OData feeds of the data you want to use. There is a potentially useful download called the Microsoft Data Management Gateway that does allow you to set up an encrypted link between your on-premise SQL Server or Oracle databases (and OData feeds), but as yet this doesn’t allow for connection to SSAS, so we were not able to make use of it.
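To give a feel for the OData route, here is a minimal sketch of building a feed URL that returns only the subset of data a single report needs. It is not what we built in the POC; the service root, entity set and column names are all made up for illustration, and it only uses the standard OData query options `$select`, `$filter` and `$top`.

```python
from urllib.parse import quote, urlencode


def build_odata_url(service_root, entity_set, select=None, filter_expr=None, top=None):
    """Build an OData query URL that asks for a subset of an entity set.

    service_root and entity_set are hypothetical; point them at your own feed.
    """
    options = {}
    if select:
        # $select limits the columns returned
        options["$select"] = ",".join(select)
    if filter_expr:
        # $filter limits the rows returned
        options["$filter"] = filter_expr
    if top is not None:
        # $top caps the number of rows
        options["$top"] = str(top)

    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Keep "$", "," and "'" readable; spaces become %20
        url += "?" + urlencode(options, quote_via=quote, safe="$,'")
    return url


# Hypothetical feed: sales by region for one year, capped at 1000 rows
url = build_odata_url(
    "https://example.com/odata/",
    "Sales",
    select=["Region", "Year", "SalesAmount"],
    filter_expr="Year eq 2013",
    top=1000,
)
print(url)
```

A URL like this could then be used as an OData feed source when loading data into a Power Pivot model, assuming you have something on your side that exposes the data as OData in the first place.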

The other massive benefit for my customer, and most other customers, is the ability to have true mobile BI. Ultimately the pieces they need to access are Excel, Power View and SSRS. And unfortunately where they need them is on an iPad! This customer actually wanted to see Excel and Power View reports on the Nexus as well. Power View can be rendered in HTML5, however it warns you when you use this view that some things may not work (most notably the play axis, although I still can’t find a real world use for this). We found it most cumbersome around Bing Maps in Power View. Having done some mobile app development, I know how hard it is to make sure an app functions and offers a similar user experience across browsers and devices, however with Microsoft’s resources they need to get this right.

Finally, the biggest drawback of the whole POC was the performance and usability of actually getting to the report. From a mobile BI perspective, what we want is for our analyst to create a report in Excel: check (once we gave them a Power Pivot model). The ability for said analyst to publish the Power View dashboard to the Power BI site: check. An alert telling our end users there is a new report to go and look at: nope. OK, so can the analysts simply click a link to our Power BI site and look at a list of reports? Not quite! They have to log in to our SharePoint Online team site (we don’t want this at all) and then launch Power BI from the left hand links. The user experience here is not just too many clicks but also a conflicting look/feel. The team site can be customised and made to look almost corporate, in true SharePoint fashion, however the Power BI site cannot be and looks blue and white. Don’t get me wrong, it looks OK, but this is a real world business. The point of our mobile BI piece is for senior execs to be able to launch a report from their iPad on the golf course and get a glance at how their business is going. If they have to go through a Microsoft branded page they are not going to be terribly impressed.

And the speed… the lack of it. Loading the team site, slow. Loading the Power BI site, slow. Loading the reports, slower. Even when running HTML5 as opposed to Silverlight, interacting with a Power View dashboard was slow. This needs to be looked at and fixed. Alternatively, give us a link directly to a single report; we can make those look corporate, and hopefully we can push them to use Surfaces with IE and Silverlight so interaction is fast!

There were other general things that can be summarised here:

1. Inability to link to on-premise data in the form of the SSAS cube.

2. General site performance – Loading the Team site was slow (took nn), loading the Power BI page took nn, loading reports took too long.

3. Too many clicks to get to a report

4. No ability to share a report via email link (to take the user straight to the report)

5. Power BI site cannot be customized in line with the SharePoint look/feel, based on corporate requirements.

6. Featured Reports option not working on Android and Apple devices.

7. No ability to remove Featured Reports.

8. Inability to connect directly to Twitter feeds from Power Query and therefore link to a Power Pivot model and visualize through Power View.

9. Bing Maps displayed excellently in the Windows app and through Silverlight, but through HTML5 they were not responsive enough: there were issues with pinching to zoom and moving the map around with touch, and the map points did not always refresh when changing a filter.

10. Interacting with drillable charts in Power View (in HTML5) on the iPad and Nexus was tricky; sometimes the drill worked, other times it highlighted the slice/column.

To Power BI or Not to Power BI?

In short, my customer chose not to Power BI at this time and I agree with them. Obviously, depending on your customer’s or business’s needs, this decision may be different. But with the issues we encountered it wasn’t a viable option for end user reporting and dashboarding. BUT… I have it on great authority that a lot of the issues we found will be fixed in coming release(s). In fact, if you read Chris Webb’s blog post, a few of the issues we encountered were mentioned at the recent PASS summit.

I have advised my customer to wait until said release(s) and to try again with our POC upon their potential new release (perhaps mid-July as the rumour mill suggests?). This, coupled with the relative cost versus alternatives such as QlikView and Tableau AND the fact that most companies are looking for a suitable upgrade path for MS Office, means that people will use this toolset, and rightly so. If Microsoft can make v2 with all the required enhancements they will have a truly amazing BI stack, imo the best in the marketplace. Let’s cross our fingers and hope July is not just sunny but Power BI v2 comes out and knocks us all down!

As usual any feedback or tips very much appreciated!

P.S – It would also be so wonderful if I could extend my trial. Now I am looking at having to re-do all my POC work, from scratch, in July!

P.P.S – Check out Microsoft’s latest guide on the BI Tool Use